How Similarweb mines for insights from the most unexpected places

Learn about their novel data sources and how to apply the principles at a smaller scale

If data is the new oil, Similarweb has taught me to treat everything online as an oil deposit. Every webpage is a seabed; your goal is to become a digital oil rig and unearth the oil buried deep down.

I’ll be covering:

Their most unexpected data source and how to spot others

Why everything online could be an oil deposit

How they extract data once, sell insights twice

Their most unexpected data source and how to spot others

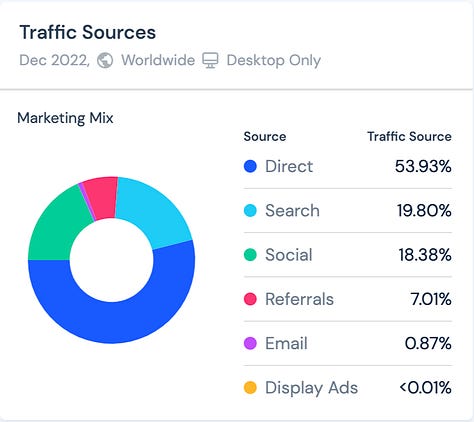

The basic gist of Similarweb is:

Take a ton of internet traffic data

Clean and analyse it

Show insights

This helps businesses make better decisions than their competitors. Here’s what their browser extension shows.

The first thing that intrigued me was: how are they even getting all this juicy data? I can understand how browsers and search engines could collect it. But how is Similarweb doing it?

Turns out, their method is simpler and more elegant than I expected. They have a few data sources, but here’s my favourite:

“Direct Measurement – millions of websites and apps choose to share their first-party analytics with us.”

I’m sorry, wot..?

Yes, you read that right! I couldn’t for the life of me figure out why anyone would want to hand over their precious data. They’d be showing their competitors exactly how they’re doing; that sounded so dumb.

It actually makes a ton of sense from a publisher’s perspective, though. By submitting their analytics data, they get their site verified, which helps them get discovered by advertisers and monetise their traffic.

How this could work in practice:

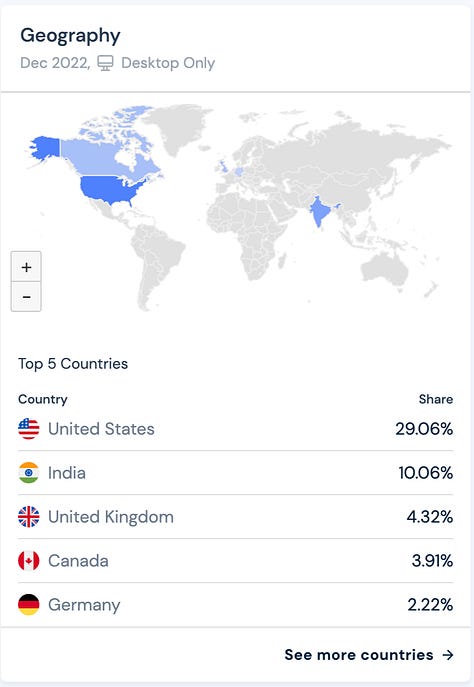

A popular ecommerce watch brand like MVMT hits product-market fit

The founder decides to pour a ton of advertising fuel into their rocket ship

The founder looks across various publishers and their traffic data on Similarweb to decide where to spend the advertising budget

A publisher like Buzzfeed verifies its traffic on Similarweb and gets placed #3 in the watch category list

The founder of MVMT picks Buzzfeed

This is incredible. I’m kind of amazed there aren’t more examples like this in other industries. Why doesn’t this happen for Twitter accounts? Why can’t you verify your engagement so businesses will pay you for sponsored posts?

Helping monetise traffic is one example, but couldn’t this work for driving any kind of business value? Couldn’t it work for recruitment?

For example, software engineers prefer to work at companies where they ship quickly and often. In fact, the industry even tracks this with a set of measures called DORA metrics (such as deployment frequency and lead time for changes). Wouldn’t it be useful for candidates to see which companies ship best when viewing their website?
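To give a feel for what a "verified shipping" number could be, here's a minimal sketch of two DORA metrics, deployment frequency and median lead time for changes, computed from a deployment log. The log format and timestamps are made up for illustration; a real team would pull commit and deploy times from its own CI/CD system, and how a company would verify and publish these figures is left open.

```python
from datetime import datetime, timedelta
from statistics import median

# Hypothetical deployment log: (commit_time, deploy_time) for each change shipped.
deployments = [
    (datetime(2023, 1, 2, 9), datetime(2023, 1, 2, 15)),   # 6 hours
    (datetime(2023, 1, 3, 10), datetime(2023, 1, 4, 10)),  # 24 hours
    (datetime(2023, 1, 5, 8), datetime(2023, 1, 5, 12)),   # 4 hours
    (datetime(2023, 1, 9, 11), datetime(2023, 1, 9, 13)),  # 2 hours
]


def deployments_per_week(records: list[tuple[datetime, datetime]], period_days: int) -> float:
    """Deployment frequency: average deployments per week over a window of period_days."""
    return len(records) * 7 / period_days


def median_lead_time(records: list[tuple[datetime, datetime]]) -> timedelta:
    """Lead time for changes: median time from commit to deploy."""
    return median([deploy - commit for commit, deploy in records])


print(deployments_per_week(deployments, period_days=14))  # 2.0
print(median_lead_time(deployments))  # 5:00:00
```

The median is used for lead time so that one slow change (the 24-hour one here) doesn't dominate the number a candidate would see.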

So ask yourself this:

What private data could you verify for businesses to drive value for them?

Why everything online could be an oil deposit

Another data source they use is “Public Data Extraction”, which means they index and scrape billions of websites and apps.

You’ve heard the adage “one man’s trash is another man’s treasure”. The digital version of this is:

One company’s public data is another company’s proprietary insight

Take Similarweb’s Sales Intelligence product: it highlights technologies present on websites so salespeople can find potential leads.

How do they identify these technologies?

Every website that uses products like helpdesk software or customer live chat needs to inject some code into its pages. Anyone can inspect this easily by looking at the website’s source code. That gives a ton of insight into which tools a company is paying for and roughly how much it’s spending.
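Here's a minimal sketch of that kind of inspection, using Python's built-in `html.parser`. It collects every `<script src>` URL plus the text of inline scripts, then looks for known vendor fingerprints. The `FINGERPRINTS` table is a small illustrative assumption (a few script hosts commonly used by Intercom, Zendesk and HubSpot); it is not Similarweb's method, and real detectors such as Wappalyzer maintain far larger, regularly updated signature sets.

```python
from html.parser import HTMLParser

# Illustrative fingerprints: a substring found in script code or URLs -> product.
# Examples only; a real detector needs a large, maintained signature set.
FINGERPRINTS = {
    "widget.intercom.io": "Intercom (live chat)",
    "static.zdassets.com": "Zendesk (helpdesk)",
    "js.hs-scripts.com": "HubSpot (marketing)",
}


class ScriptCollector(HTMLParser):
    """Collects <script src> URLs and inline <script> contents from a page."""

    def __init__(self) -> None:
        super().__init__()
        self.snippets: list[str] = []
        self._in_script = False

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            self._in_script = True
            for name, value in attrs:
                if name == "src" and value:
                    self.snippets.append(value)

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_script = False

    def handle_data(self, data):
        # Only keep text that sits inside a <script> element.
        if self._in_script:
            self.snippets.append(data)


def detect_technologies(html: str) -> set[str]:
    """Return the products whose fingerprint appears in any script URL or inline script."""
    collector = ScriptCollector()
    collector.feed(html)
    return {
        product
        for snippet in collector.snippets
        for fingerprint, product in FINGERPRINTS.items()
        if fingerprint in snippet
    }


page = """
<html><head>
  <script src="https://widget.intercom.io/widget/abc123"></script>
  <script src="/static/app.js"></script>
</head><body>Hello</body></html>
"""
print(detect_technologies(page))  # {'Intercom (live chat)'}
```

Scanning inline script text matters because many vendors ship a small inline loader that builds the script URL in JavaScript rather than a plain `<script src>` tag.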

This completely changed how I see things. It’s taught me to look at everything I encounter as a potential data source. The trick is figuring out what can be inferred from it. If you can infer purchasing decisions, then you’re onto a winner.

For example, job postings name the tools that candidates are expected to have experience with. This makes them an awesome data source, because they’re likely to mention technologies that don’t show up in the results from Wappalyzer and BuiltWith, such as internal or backend tools that never appear in a website’s code.
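A minimal sketch of mining job postings for tool mentions, assuming you already have the posting text: it counts how many postings name each tool, matching whole words case-insensitively. The `TOOLS` list and the sample postings are made up for illustration; a real pipeline would need a much larger vocabulary and alias handling (e.g. "Postgres" vs "PostgreSQL").

```python
import re
from collections import Counter

# Hypothetical vocabulary of paid tools to look for.
TOOLS = ["Snowflake", "Kafka", "Terraform", "Datadog", "Jira"]


def tools_mentioned(posting: str) -> set[str]:
    """Return the tools named in one job posting (whole words, case-insensitive)."""
    return {
        tool
        for tool in TOOLS
        if re.search(rf"\b{re.escape(tool)}\b", posting, flags=re.IGNORECASE)
    }


def tool_counts(postings: list[str]) -> Counter:
    """Count how many postings mention each tool (each posting counts once per tool)."""
    counts: Counter = Counter()
    for posting in postings:
        counts.update(tools_mentioned(posting))
    return counts


# Made-up sample postings.
postings = [
    "Data Engineer: experience with Snowflake and Kafka required.",
    "Platform Engineer: manage infrastructure with Terraform and monitor it in Datadog.",
    "Analytics Engineer: strong SQL, dbt and snowflake experience.",
]
print(tool_counts(postings))
```

Counting postings rather than raw mentions keeps one keyword-stuffed listing from inflating a tool's score.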

So ask yourself this:

What public data about businesses can you analyse to infer their purchasing decisions?

Extract data once, sell insights twice

Their setup lets them extract data once, then take that same data and “package” it up as multiple “market intelligence” products.

They made a whopping $140M in revenue in 2021, and their Q3 2022 revenue grew 41% year-over-year to $50.0 million. Here are some of the products:

Investor Intelligence - identifies trends and inflection points so investors can put money into markets experiencing rapid growth

Shopper Intelligence - spots promising consumer behaviours so ecommerce brands can launch products where there is a lot of demand

This is amazing when you think about it. Every subsequent market becomes easier to launch into because they can reuse the same data and the same infrastructure.
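To make “extract data once, sell insights twice” concrete, here's a minimal sketch assuming a made-up table of monthly visits per product category. The same rows feed an investor-style view (which categories are growing fastest) and a shopper-style view (where demand is highest right now). The data, function names and the mapping onto Similarweb's products are hypothetical; it only illustrates the reuse pattern.

```python
from collections import defaultdict

# Hypothetical raw data, extracted once: (category, month, visits).
TRAFFIC = [
    ("smartwatches", "2022-08", 1_000),
    ("smartwatches", "2022-09", 1_500),
    ("sneakers", "2022-08", 4_000),
    ("sneakers", "2022-09", 4_200),
    ("yoga mats", "2022-08", 500),
    ("yoga mats", "2022-09", 900),
]


def visits_by_category(month: str) -> dict[str, int]:
    """Total visits per category for one month (shared by every product view)."""
    totals: dict[str, int] = defaultdict(int)
    for category, m, visits in TRAFFIC:
        if m == month:
            totals[category] += visits
    return dict(totals)


def investor_view(prev: str, curr: str) -> list[tuple[str, float]]:
    """Categories ranked by month-over-month growth: the 'inflection point' angle."""
    before, after = visits_by_category(prev), visits_by_category(curr)
    growth = {c: after[c] / before[c] - 1 for c in after if before.get(c)}
    return sorted(growth.items(), key=lambda kv: kv[1], reverse=True)


def shopper_view(month: str) -> list[tuple[str, int]]:
    """Categories ranked by absolute demand in one month: the 'where to launch' angle."""
    return sorted(visits_by_category(month).items(), key=lambda kv: kv[1], reverse=True)


print(investor_view("2022-08", "2022-09"))  # yoga mats grows fastest
print(shopper_view("2022-09"))              # sneakers has the most demand
```

Both views call the same `visits_by_category` helper, so adding a third "product" is just another small function over data you already have.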

Actually, they don’t even need to extract all the data themselves. Their docs mention partnerships that provide data to them, but the wording sounds mad ominous…

Partnerships – a global network of organizations that collect “digital signals” across the Internet

WTF are “digital signals”, and what exactly is a “global network of organizations”? 😰 When I hear “global network of organizations”, I think of Spectre from the James Bond films.

After some digging, I realised this is corporate speak for ‘we buy anonymised data from ISPs and free consumer software products like VPNs’.

Same data but different insights drawn for different roles

Learning about Similarweb has taught me there are so many businesses and products waiting to be built if we look at the world through the lens of data sources and data products.

If the digital signals were good for this post, please let me know by subscribing below!